How to Prevent Hallucinations in Artificial Intelligence Agents

October 7, 2025 By Yodaplus

Artificial Intelligence (AI) systems, especially Agentic AI and Generative AI, have transformed how we design autonomous agents and intelligent systems. However, their flexibility and creativity come with a challenge: hallucinations. These are instances where an AI system generates incorrect or ungrounded information. For developers building AI agents, preventing hallucinations is essential to ensure accuracy, reliability, and user trust.

This blog explores why hallucinations occur in autonomous AI, how to detect them, and what best practices can help reduce their occurrence using Artificial Intelligence solutions and reliable AI frameworks.

What Are Hallucinations in AI Agents?

Hallucinations in Generative AI refer to responses that are not supported by real data or logical grounding. In the context of Agentic AI, hallucinations may lead to false reasoning, wrong task execution, or unsafe actions.

These can be categorized into two types:

- Minor Hallucinations: Slight deviations or creative inaccuracies that do not cause harm.
- Major Hallucinations: Critical errors that mislead users or cause incorrect autonomous actions in AI workflows.

For example, a workflow agent summarizing reports could create false data references if not connected to verified sources.

Why Do Hallucinations Occur in Autonomous Agents?

Several factors contribute to hallucinations in AI systems and intelligent agents:

- Data Gaps: If the underlying dataset lacks relevant information, the LLM or AI model may fill in gaps with assumptions.
- Ambiguous Prompts: Poorly structured instructions during prompt engineering can lead to ungrounded responses.
- Model Complexity: Large Neural Networks and Deep Learning models are probabilistic, meaning they predict likely outcomes rather than guaranteed truths.
- Stale Knowledge Bases: Without frequent updates, even advanced knowledge-based systems can produce outdated or irrelevant outputs.

As machine learning models rely heavily on statistical patterns, ensuring clear boundaries and grounding sources becomes key to minimizing hallucinations.
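The probabilistic behaviour described above can be illustrated with a toy sketch. The candidate answers and their scores are invented for illustration and do not come from any real model; the point is only that sampling from a probability distribution leaves some chance of picking a plausible but wrong answer.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a model might assign to three candidate answers.
CANDIDATES = ["correct answer", "plausible but wrong", "clearly wrong"]
SCORES = [4.0, 2.0, 1.0]

def sample_answer(temperature, rng):
    """Draw one answer according to the softmax probabilities."""
    probs = softmax(SCORES, temperature)
    return rng.choices(CANDIDATES, weights=probs, k=1)[0]

# Show how the distribution changes with temperature.
for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax(SCORES, t)])
```

Lower temperatures concentrate probability on the top-scoring candidate, while higher ones spread it across the alternatives. Even so, the top-scoring candidate is only the most likely one, which is why grounding sources still matter.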

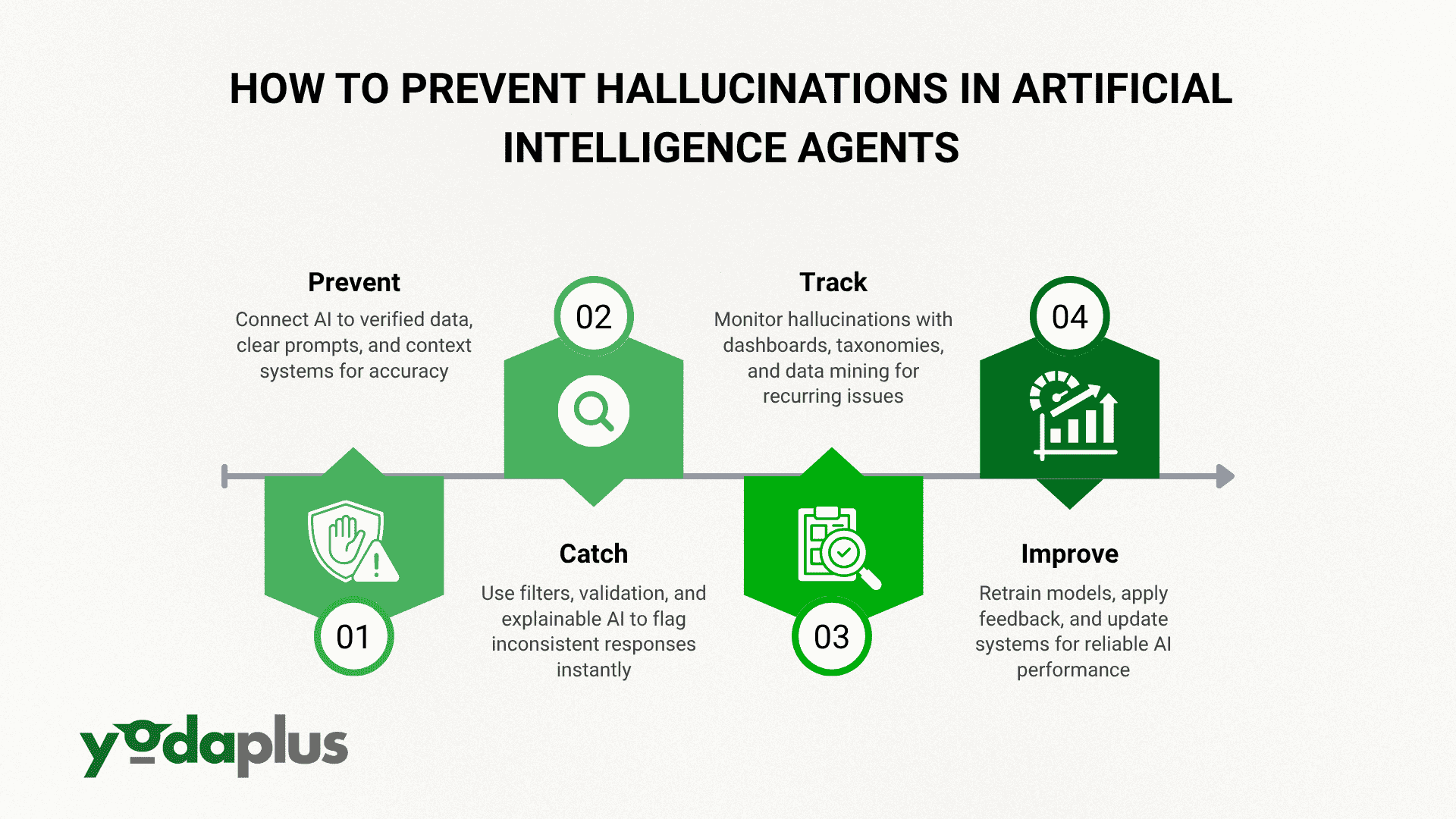

Preventing Hallucinations: The Four-Pillar Approach

To make autonomous systems more dependable, organizations can adopt a four-pillar approach: Prevent, Catch, Track, and Improve.

1. Prevent: Grounding AI Responses in Data

The best prevention method is ensuring that AI agents rely on verified and current data.

- Use Knowledge Bases: Connect agents to structured knowledge-based systems for factual grounding.
- API Integrations: Allow Generative AI to fetch real-time data for dynamic and accurate responses.
- Context Windows: Use MCP (Model Context Protocol) connections or retrieval over vector embeddings to keep relevant context available during task execution.
- Prompt Clarity: Craft precise prompts that leave little room for ambiguity in the instructions the agent receives at run time.

These practices make AI technology more context-aware and less likely to generate unjustified responses.
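The grounding practices above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design: the `Document` type, the `retrieve` and `build_grounded_prompt` helpers, and the one-year freshness limit are all hypothetical, and plain word overlap stands in for the embedding similarity a real system would use.

```python
import re
from dataclasses import dataclass
from datetime import date

@dataclass
class Document:
    doc_id: str
    text: str
    updated: date  # last time the knowledge-base entry was verified

def tokenize(text):
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, today, max_age_days=365, top_k=2):
    """Rank fresh documents by word overlap with the query."""
    query_words = tokenize(query)
    scored = []
    for doc in docs:
        if (today - doc.updated).days > max_age_days:
            continue  # skip stale entries instead of grounding on them
        overlap = len(query_words & tokenize(doc.text))
        if overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_grounded_prompt(query, sources):
    """Build a prompt restricted to the sources, or None if there are none."""
    if not sources:
        return None  # caller should decline or escalate rather than guess
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in sources)
    return (
        "Answer using only the sources below and cite their ids. "
        "If the sources do not contain the answer, reply 'I don't know'.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

Returning `None` when nothing relevant is retrieved is a deliberate choice in this sketch: it forces the calling code to handle the data gap explicitly instead of letting the model fill it with assumptions.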

2. Catch: Identifying Hallucinations in Real Time

Even with preventive mechanisms, some errors slip through. To detect them early:

- Input Filters: Prevent irrelevant or unsafe prompts using rule-based filters.
- Output Validation: Use classification models to check if a response is grounded in retrieved data.
- Explainable AI: Implement AI-powered automation tools that highlight how and why a model generated a specific output.

For instance, a multi-agent framework such as CrewAI can be used to add a reviewer agent that analyzes conversation patterns, say in a logistics application, and flags inconsistencies before they reach the user.
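A rough sketch of the first two checks is shown here. The blocked patterns, function names, and the 0.6 support threshold are assumptions chosen for illustration. The output check uses a simple word-overlap heuristic in place of the classification model mentioned above, so it only approximates what a trained grounding classifier would do.

```python
import re

# Hypothetical rules; a real deployment would maintain its own list.
BLOCKED_PATTERNS = [
    re.compile(r"ignore (all )?previous instructions", re.IGNORECASE),
    re.compile(r"reveal .*system prompt", re.IGNORECASE),
]

def passes_input_filter(prompt):
    """Rule-based input filter: reject prompts matching a blocked pattern."""
    return not any(p.search(prompt) for p in BLOCKED_PATTERNS)

def _words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def split_sentences(text):
    """Naive sentence splitter on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def ungrounded_sentences(response, retrieved_text, min_support=0.6):
    """Return response sentences whose words mostly do not appear
    in the retrieved text (a heuristic, not a trained classifier)."""
    source_words = _words(retrieved_text)
    flagged = []
    for sentence in split_sentences(response):
        words = _words(sentence)
        if not words:
            continue
        support = len(words & source_words) / len(words)
        if support < min_support:
            flagged.append(sentence)
    return flagged
```

Flagged sentences could then be removed, regenerated, or routed to a human reviewer, depending on how severe an error would be in the workflow.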

3. Track: Monitoring Hallucinations Post-Deployment

Continuous tracking helps refine AI agents in production. Organizations can:

- Maintain a hallucination taxonomy that records the type, source, and impact of every error.
- Use monitoring dashboards powered by AI-driven analytics to identify trends.
- Apply data mining on historical outputs to find recurring ungrounded patterns.

Tracking provides insights for both developers and supervisors, ensuring long-term accountability and better Responsible AI practices.
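One possible shape for such a taxonomy is sketched below. The record fields and the example labels (such as `fabricated_reference`) are hypothetical; the severity levels and source categories simply reuse the minor/major split and the causes listed earlier in this post.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum

class Severity(Enum):
    MINOR = "minor"
    MAJOR = "major"

@dataclass(frozen=True)
class HallucinationRecord:
    agent: str          # which agent produced the output
    kind: str           # type of error, e.g. "fabricated_reference"
    source: str         # suspected cause, e.g. "data_gap"
    severity: Severity  # impact of the error

def summarize(records):
    """Count records per (source, severity) pair for a dashboard."""
    return Counter((r.source, r.severity) for r in records)

def recurring(records, min_count=2):
    """Return (kind, source) pairs seen at least min_count times."""
    counts = Counter((r.kind, r.source) for r in records)
    return [key for key, n in counts.items() if n >= min_count]
```

Feeding `summarize` into a dashboard and `recurring` into a periodic review is one simple way to turn individual error reports into trends.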

4. Improve: Continuous Learning and Feedback Loops

Improvement is an ongoing process. Every autonomous agent must evolve through:

- Regular Retraining: Updating models using new datasets improves factual grounding.
- Human Oversight: Experts reviewing AI in business operations can catch edge cases that automation might miss.
- Feedback Loops: Gathering user feedback helps refine prompts, rules, and data connections.
- Safe Model Updates: Periodic model evaluations ensure that AI frameworks align with enterprise reliability goals.

Over time, these steps make autonomous AI systems more adaptive and aligned with real-world logic.
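As a small illustration of a feedback loop, the sketch below aggregates user feedback per prompt template and flags templates whose error rate is high enough to warrant human review. The function name, the template ids, and both thresholds are assumptions made for this example.

```python
from collections import defaultdict

def flag_for_review(feedback, max_error_rate=0.2, min_votes=5):
    """Flag prompt templates with too many reported errors.

    feedback: iterable of (template_id, was_correct) pairs from users.
    Returns {template_id: error_rate} for templates that have at least
    min_votes votes and an error rate above max_error_rate.
    """
    totals = defaultdict(lambda: [0, 0])  # template_id -> [votes, errors]
    for template_id, was_correct in feedback:
        totals[template_id][0] += 1
        if not was_correct:
            totals[template_id][1] += 1

    flagged = {}
    for template_id, (votes, errors) in totals.items():
        if votes >= min_votes and errors / votes > max_error_rate:
            flagged[template_id] = errors / votes
    return flagged
```

The `min_votes` guard keeps a template from being flagged on the basis of one or two complaints, so reviewers see only patterns with enough evidence behind them.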

Designing Reliable AI Systems for the Future

Preventing hallucinations is not about over-restricting AI creativity but ensuring that responses are explainable, grounded, and consistent. As Agentic AI evolves, techniques like semantic search, multi-agent systems, and AI agent software will play a major role in building reliable, goal-aligned autonomous systems.

At Yodaplus Artificial Intelligence Solutions, we focus on designing AI systems that balance autonomy with accountability, ensuring every response is grounded, auditable, and aligned with user intent.

The future of Artificial Intelligence in business depends on our ability to balance innovation with control, making AI agents not just powerful but also trustworthy and transparent.