Fine-Tuning vs Prompt Engineering for Internal Tools

July 9, 2025 By Yodaplus

The use of Large Language Models (LLMs) in business operations is growing fast. From Financial Technology Solutions and Supply Chain Technology to ERP platforms and Document Digitization, AI is being embedded into internal tools to improve decision-making, reduce manual effort, and enable smart automation.

But once you choose an LLM, how do you make it work for your specific use case?

Two common strategies are fine-tuning and prompt engineering. While both help tailor the model’s behavior, they serve different purposes, require different resources, and come with different risks.

In this post, we compare fine-tuning and prompt engineering in the context of AI-powered internal tools and help you decide which one fits your needs.

What Is Fine-Tuning?

Fine-tuning is the process of further training a pre-trained LLM on a custom dataset to specialize it for your business. You provide examples of the behavior you want, and the model’s parameters are adjusted to reflect the patterns in that data.

Use case:

A company wants an AI assistant to answer questions using internal policy documents and past customer interactions. They collect hundreds of real Q&A pairs and fine-tune the base model.
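As a rough sketch of the data-preparation step, Q&A pairs like these are often converted to a JSON Lines file before training. The chat-style `messages` layout below is an assumption: several hosted fine-tuning services accept something similar, but the exact schema depends on your provider. The function names and sample pairs are hypothetical.

```python
import json


def to_chat_record(question: str, answer: str, system_prompt: str) -> dict:
    """Wrap one Q&A pair in a chat-style training record (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question.strip()},
            {"role": "assistant", "content": answer.strip()},
        ]
    }


def to_jsonl(pairs, system_prompt: str) -> str:
    """Serialize Q&A pairs as JSON Lines: one JSON object per line."""
    return "\n".join(
        json.dumps(to_chat_record(q, a, system_prompt), ensure_ascii=False)
        for q, a in pairs
    )


# Hypothetical examples; real data would come from policy docs and support logs.
qa_pairs = [
    ("How many days of annual leave do new hires get?", "New hires get 18 days."),
    ("Who approves travel expenses?", "Your direct manager approves them."),
]

training_jsonl = to_jsonl(qa_pairs, "You answer questions about internal company policy.")
```

Keeping one record per line makes it easy to spot-check, deduplicate, and split the data into training and validation sets before any training run.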

Pros:

- High accuracy on niche or repetitive tasks

- Better memory of business-specific language or workflows

- Can adapt well to internal datasets like ERP logs, support transcripts, or compliance checklists

Cons:

- Expensive and time-consuming

- Requires ML expertise and infrastructure

- Risk of overfitting or forgetting original knowledge

Fine-tuning is often a good fit for areas such as smart contract development, Credit Risk Management Software, and other AI solutions that require a deep understanding of internal processes.

What Is Prompt Engineering?

Prompt engineering is about designing better instructions or input formats to guide the LLM’s output. Instead of modifying the model, you give it better context and structure.

Use case:

A team uses a general-purpose LLM to extract invoice data. Instead of training it, they write a prompt like:

“Extract item name, price, tax, and total from the following scanned invoice text: [insert data]”
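In practice, a prompt like this usually lives in a small template function so it can be reused and versioned. The sketch below is one way to do that. The function name is hypothetical, and the instruction to return JSON with `null` for missing fields is our own addition, not part of the prompt above.

```python
INVOICE_FIELDS = ["item name", "price", "tax", "total"]


def build_invoice_prompt(invoice_text: str, fields=INVOICE_FIELDS) -> str:
    """Build an extraction prompt for one invoice (hypothetical helper)."""
    # Join fields as "a, b, c, and d"; fall back to a plain join for short lists.
    if len(fields) > 2:
        field_list = ", ".join(fields[:-1]) + ", and " + fields[-1]
    else:
        field_list = " and ".join(fields)
    return (
        f"Extract {field_list} from the following scanned invoice text. "
        # Assumption: asking for JSON makes the output easier to parse downstream.
        "Return a JSON object with one key per field, "
        "using null for any field that is missing.\n\n"
        f"Invoice text:\n{invoice_text.strip()}"
    )


prompt = build_invoice_prompt("Widget A  10.00  tax 1.80  total 11.80")
```

When a business rule changes, for example a new field such as a purchase order number, only the `fields` list needs to be updated.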

Pros:

- Fast and cost-effective

- No model training needed

- Easy to update as business rules change

Cons:

- Less consistent results across large-scale workflows

- No built-in memory of past interactions; relevant context has to be supplied in each prompt

- Needs trial-and-error for optimal performance

Prompt engineering works well in tools like GenRPT, where you generate reports from SQL or Excel files using plain language. It is also a key technique in Artificial Intelligence services focused on document summarization or query handling.

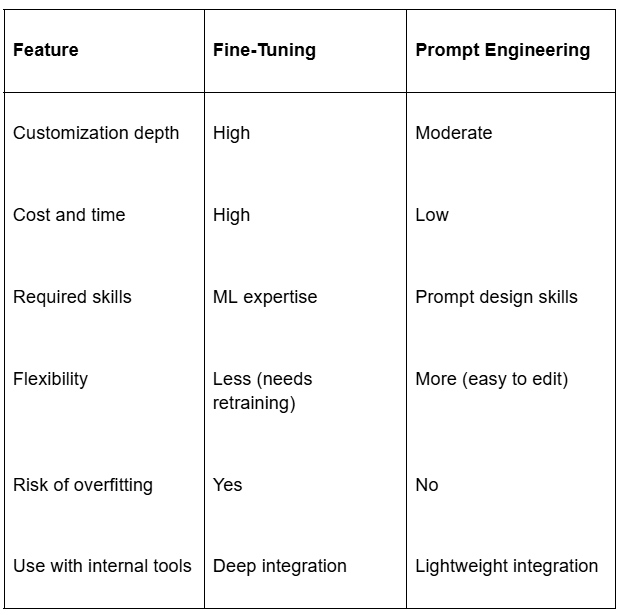

Key Differences: Fine-Tuning vs Prompt Engineering

For internal systems like Retail inventory systems, Warehouse Management Systems (WMS), or custom ERP dashboards, the choice often depends on how frequently tasks change and how specific the language or logic is.

When to Use Fine-Tuning in Internal Tools

Use fine-tuning when your tasks:

- Are repetitive and high-volume

- Use domain-specific terminology

- Require consistent formatting

- Have limited variation in query types

Examples:

- Classifying customer support tickets

- Filling digital forms from scanned logistics documents

- Analyzing transaction histories in treasury management systems

In these cases, fine-tuning helps the model internalize structure and vocabulary. Once trained, it can perform with high precision even on complex documents like customs declarations, credit agreements, or compliance forms.

When to Use Prompt Engineering in Internal Tools

Use prompt engineering when your tasks:

- Are ad-hoc or exploratory

- Vary by user or department

- Pull from multiple document types

- Need flexibility and fast deployment

Examples:

- Asking for trends in supply chain delays

- Extracting terms from scanned contracts

- Creating quick summaries from ERP logs

Prompt engineering is ideal when building Agentic AI tools that work across departments and change tasks often. You can guide behavior by adjusting prompts, system instructions, or formatting examples.

This is also useful for Digital Document workflows, where AI reads varied formats like PDFs, scanned images, and emails, and the structure of input changes daily.
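The three levers mentioned above (prompts, system instructions, and formatting examples) can be combined into a single request. The sketch below assumes a chat-style message list with `system`, `user`, and `assistant` roles, which many LLM APIs accept in some form. The function name and the sample content are hypothetical.

```python
def build_messages(system_instructions: str, examples, user_input: str) -> list:
    """Assemble system instructions, few-shot examples, and the new input."""
    messages = [{"role": "system", "content": system_instructions}]
    # Each example is an (input, desired output) pair that shows the format.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real request always goes last.
    messages.append({"role": "user", "content": user_input})
    return messages


# Hypothetical formatting examples for contract term extraction.
examples = [
    ("Payment is due within 30 days of invoice.", '{"payment_terms_days": 30}'),
    ("Payment is due within 45 days of delivery.", '{"payment_terms_days": 45}'),
]

messages = build_messages(
    "You extract contract terms and reply with JSON only.",
    examples,
    "The buyer shall pay within 60 days of receipt.",
)
```

Switching departments or tasks then means swapping the system instructions and examples, with no change to the model itself.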

Combining Both Approaches

In many cases, a hybrid strategy works best. You can:

- Fine-tune a base model with examples from your business

- Use prompt engineering to adjust for user-specific needs

For example, you might fine-tune a model to understand your internal terminology and ERP schema. Users can then interact with it through prompts tailored to their different reporting needs.

This is common in Supply Chain Optimization, where historical data is used to fine-tune the agent, and new constraints or policies are handled with real-time prompts.

Best Practices

Whether you’re fine-tuning or writing prompts, here are a few best practices:

- Use representative data

Make sure your fine-tuning or prompt examples reflect real use cases. Include edge cases and noise.

- Ground the model in business context

Connect your model to internal knowledge bases, inventory management systems, or compliance documents for better reliability.

- Test with real workflows

Simulate how users interact with your AI tool. Focus on explainability and traceability.

- Review security and governance

Ensure sensitive data in training or prompts is protected and follows enterprise policies.

- Monitor performance over time

Business processes evolve. Regularly update prompts or retrain fine-tuned models to stay accurate.
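Testing and monitoring are easier with a small regression suite that can be rerun whenever a prompt or model changes. The sketch below is a minimal example of the idea, not a full evaluation framework. `stub_model` stands in for a real model call, and the ticket categories and test cases are hypothetical.

```python
from typing import Callable


def evaluate(model_fn: Callable[[str], str], cases) -> dict:
    """Run each (input, expected) case through model_fn and collect failures."""
    failures = []
    for text, expected in cases:
        actual = model_fn(text)
        # Compare case-insensitively and ignore surrounding whitespace.
        if actual.strip().lower() != expected.strip().lower():
            failures.append({"input": text, "expected": expected, "actual": actual})
    total = len(cases)
    passed = total - len(failures)
    return {
        "total": total,
        "passed": passed,
        "accuracy": passed / total if total else 0.0,
        "failures": failures,  # kept for traceability when reviewing regressions
    }


def stub_model(text: str) -> str:
    """Placeholder for a real LLM call; labels a ticket by keyword only."""
    return "billing" if "invoice" in text.lower() else "other"


cases = [
    ("Invoice 123 was charged twice", "billing"),
    ("Cannot log in to the portal", "access"),
    ("Where is my invoice?", "billing"),
]

report = evaluate(stub_model, cases)
```

Tracking the accuracy figure and the list of failures over time gives an early signal that a prompt needs updating or a fine-tuned model needs retraining.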

Final Thoughts

Fine-tuning and prompt engineering are both valuable strategies to adapt LLMs for internal tools. The right choice depends on your budget, technical resources, task complexity, and how fast your internal workflows change.

- Use fine-tuning for depth, consistency, and scale.

- Use prompt engineering for speed, flexibility, and control.

At Yodaplus, we help enterprises integrate AI into their internal systems, from custom ERP modules to Agentic AI workflows, using the right mix of fine-tuning and prompt design. Whether you’re optimizing supply chains, digitizing finance workflows, or automating document handling, we ensure your AI works the way your business does.