Debugging Agentic AI: Use Logs to Improve Coordination

November 18, 2025 By Yodaplus

Coordination is one of the hardest parts of working with agentic AI. When multiple AI agents operate inside a multi-agent system, they share tasks, exchange messages, and carry out workflow activities. If one agent fails, stalls, repeats work, or misunderstands instructions, the entire process suffers. Agent logs let teams see what happened inside the system and fix coordination failures based on evidence.

Modern agentic AI supports business operations, AI applications, data processing, knowledge-based systems, and AI-powered automation. These systems rely on machine learning, NLP, LLMs, and advanced prompt engineering. They support tasks in logistics, digital retail solutions, and information retrieval. As complexity grows, organizations need better observability and stronger monitoring of AI agents.

Agent logs give a detailed history of reasoning steps, prompts, model responses, tool usage, and error messages. When used correctly, they make AI outcomes more reliable and support responsible AI practices.

Why coordination failures happen in agentic AI

Coordination failures appear when autonomous agents do not share information correctly or when actions are not aligned with expected workflows. Common causes include:

- Incomplete or unclear task definitions
- Missing context or outdated data
- Tool calling errors
- Recursive or repetitive loops
- Slow or blocked responses from external systems
- Weak prompt design or missing constraints

These failures affect results in autonomous AI, logistics, supply chain optimization, and sector-specific tasks such as document processing or the review of ship documents in maritime operations.

Agent logs let teams identify these points of failure. They show whether one agent sent a wrong instruction, a query was lost, or a model generated incorrect data. This supports AI risk management and improves agentic AI capabilities.

What agent logs include

Agent logs vary by agentic framework or platform. Many agentic AI platforms and agentic AI tools include:

- timestamps
- execution traces
- prompt history
- tool inputs and outputs
- model tokens and embeddings
- error messages and retries
- final result and decision path
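As a rough illustration of how these fields might fit into one structured record, here is a minimal Python sketch. The class name `AgentLogRecord`, every field name, and the JSON Lines output are assumptions made for this example; they are not the schema of any particular framework.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import Any, Dict, Optional


@dataclass
class AgentLogRecord:
    # Hypothetical schema: map these fields to whatever your framework emits.
    trace_id: str                      # groups all events of one workflow run
    agent: str                         # which agent produced the event
    event: str                         # e.g. "prompt", "tool_call", "error", "result"
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    prompt: Optional[str] = None
    tool_input: Optional[Dict[str, Any]] = None
    tool_output: Optional[Any] = None
    tokens_used: Optional[int] = None
    error: Optional[str] = None
    retry_count: int = 0


def to_json_line(record: AgentLogRecord) -> str:
    """Serialize one record as a single JSON line, dropping unset fields."""
    data = {k: v for k, v in asdict(record).items() if v is not None}
    return json.dumps(data, sort_keys=True)


record = AgentLogRecord(
    trace_id="trace-001",
    agent="planner",
    event="tool_call",
    timestamp="2025-11-18T10:00:00+00:00",
    tool_input={"query": "open orders"},
    tokens_used=128,
)
line = to_json_line(record)
print(line)
```

Writing one JSON object per line keeps the log easy to append to and to filter with ordinary tools, which is one reason a format like this is a common choice.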

In platforms like CrewAI, teams can track reasoning and message flow. With MCP (Model Context Protocol), structured exchange formats make logs easier to analyze. Comparing MCP vs LangChain or AutoGen vs LangChain helps teams choose the right environment, and reviewing MCP use cases can inform the choice of an agentic AI framework.

How agent logs help fix coordination failures

1. Better root cause analysis

Logs provide evidence of what went wrong. Instead of guessing, developers can search the recorded events for the first point of failure. This improves AI-driven analytics and supports reliable AI results.
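One way to search a trace for its root cause is to find the earliest event that carries an error and look at what happened just before it. The sketch below assumes each event is a dictionary with hypothetical `timestamp`, `agent`, `event`, and `error` keys; real logs will use different names.

```python
def first_failure(events, context=2):
    """Return the earliest error event in a trace plus the events just before it.

    Assumes timestamps are ISO 8601 strings in the same format and time zone,
    so sorting them as plain strings also sorts them chronologically.
    """
    ordered = sorted(events, key=lambda e: e["timestamp"])
    for index, event in enumerate(ordered):
        if event.get("error"):
            start = max(0, index - context)
            return {"failure": event, "preceding": ordered[start:index]}
    return None  # no error recorded in this trace


# Illustrative events only; they are not taken from a real system.
events = [
    {"timestamp": "2025-11-18T10:00:03Z", "agent": "writer",
     "event": "result", "error": "missing field: order_id"},
    {"timestamp": "2025-11-18T10:00:01Z", "agent": "planner",
     "event": "prompt"},
    {"timestamp": "2025-11-18T10:00:02Z", "agent": "retriever",
     "event": "tool_call", "error": "timeout"},
]

report = first_failure(events)
print(report["failure"]["agent"], report["failure"]["error"])
```

In this sample the writer's error is a downstream symptom; the earliest failure is the retriever's timeout, which is where the investigation would start.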

2. Clearer communication across agents

When a multi-agent system fails, logs show which messages were not delivered. This helps teams update routing logic and improve workflow reliability.
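A simple way to spot undelivered messages is to pair every send event with a matching receive event. This sketch assumes the log records hypothetical `message_sent` and `message_received` events that share a `message_id`; frameworks that log messaging differently would need a different matching key.

```python
def undelivered_messages(events):
    """Return send events that have no matching receive event."""
    sent = {}
    received = set()
    for event in events:
        if event["event"] == "message_sent":
            sent[event["message_id"]] = event
        elif event["event"] == "message_received":
            received.add(event["message_id"])
    return [e for message_id, e in sent.items() if message_id not in received]


# Illustrative events only; field names are assumptions for this example.
events = [
    {"event": "message_sent", "message_id": "m1", "sender": "planner", "recipient": "retriever"},
    {"event": "message_received", "message_id": "m1", "recipient": "retriever"},
    {"event": "message_sent", "message_id": "m2", "sender": "retriever", "recipient": "writer"},
]

lost = undelivered_messages(events)
for message in lost:
    print(message["message_id"], message["sender"], "->", message["recipient"])
```

Here message `m2` was sent to the writer but never received, which points at the route between the retriever and the writer.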

3. Stronger explainable AI

Logs show reasoning steps and help humans understand how an agent reached a conclusion. This supports explainable AI and improves trust in Artificial Intelligence in business.

4. Faster debugging and prompt improvement

Prompt engineering is more effective when logs reveal token usage, model behavior, and misunderstandings between agents.
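For instance, totalling token usage per agent can show which prompts deserve attention first. The sketch below assumes each event has hypothetical `agent` and `tokens_used` fields; events without a token count are treated as zero.

```python
from collections import Counter


def tokens_by_agent(events):
    """Sum the logged token counts for each agent."""
    totals = Counter()
    for event in events:
        totals[event["agent"]] += event.get("tokens_used", 0)
    return totals


# Illustrative events only; the numbers are made up for this example.
events = [
    {"agent": "planner", "tokens_used": 300},
    {"agent": "retriever", "tokens_used": 120},
    {"agent": "planner", "tokens_used": 450},
    {"agent": "writer"},  # no token count logged for this step
]

totals = tokens_by_agent(events)
heaviest_agent, heaviest_total = totals.most_common(1)[0]
print(heaviest_agent, heaviest_total)
```

An agent that dominates the totals is a reasonable first candidate for a shorter or more constrained prompt.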

5. Better safety checks

Logs support safety reviews across sectors like retail, finance, supply chain, and maritime operations. They protect organizations that rely on agentic AI applications and automated tasks.

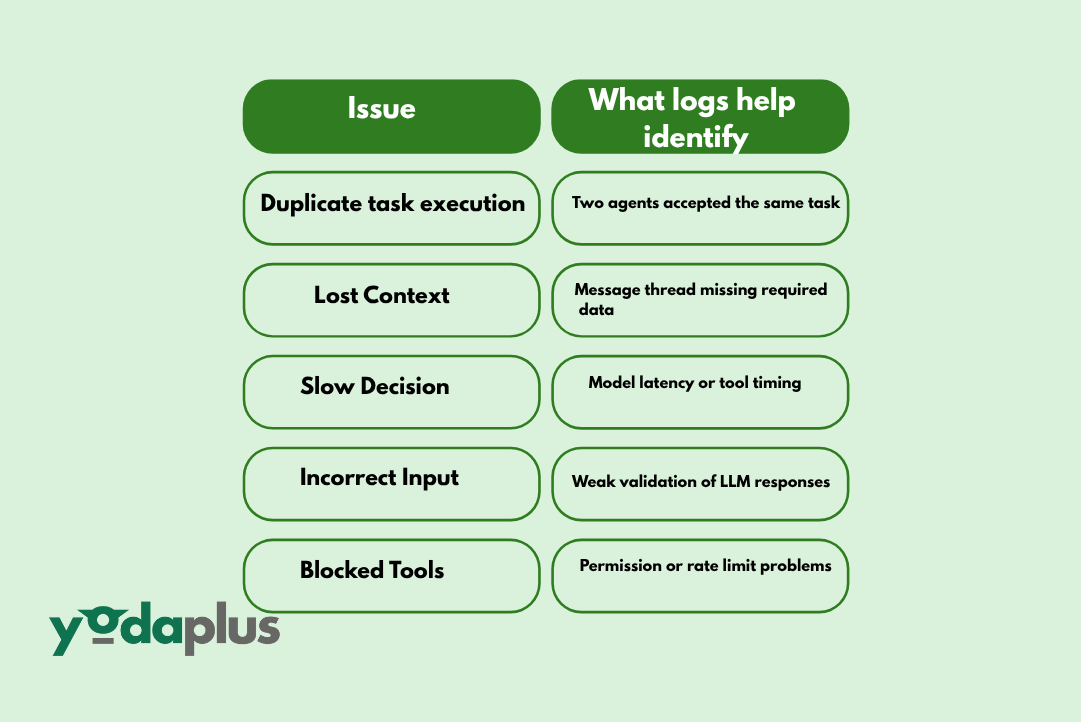

Example coordination issues agent logs can fix

Logs improve outcomes in AI models, generative AI software, self-supervised learning, deep learning, and data mining tasks.

Best practices for using agent logs

- Store logs securely with proper access controls
- Use standard formats for easier processing
- Add metadata for faster searching
- Apply semantic search or vector embeddings to analyze logs
- Integrate monitoring dashboards
- Review logs after major workflow updates
- Use automated alerts for repeated patterns
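As one example of alerting on repeated patterns, the sketch below flags an agent that makes the same tool call with the same input several times within one trace, a common sign of a loop. The field names (`trace_id`, `agent`, `tool`, `tool_input`) and the default threshold of 3 are assumptions chosen for illustration.

```python
import json
from collections import Counter


def repeated_calls(events, threshold=3):
    """Flag identical tool calls repeated at least `threshold` times in one trace."""
    counts = Counter()
    for event in events:
        if event.get("event") != "tool_call":
            continue
        # Serialize the input with sorted keys so equal inputs compare equal.
        input_key = json.dumps(event.get("tool_input"), sort_keys=True)
        counts[(event["trace_id"], event["agent"], event["tool"], input_key)] += 1
    return [
        {"trace_id": trace_id, "agent": agent, "tool": tool, "count": count}
        for (trace_id, agent, tool, _), count in counts.items()
        if count >= threshold
    ]


# Illustrative events only; they are not taken from a real system.
events = [
    {"trace_id": "t1", "agent": "retriever", "event": "tool_call",
     "tool": "search", "tool_input": {"q": "invoice 42"}},
    {"trace_id": "t1", "agent": "retriever", "event": "tool_call",
     "tool": "search", "tool_input": {"q": "invoice 42"}},
    {"trace_id": "t1", "agent": "retriever", "event": "tool_call",
     "tool": "search", "tool_input": {"q": "invoice 42"}},
    {"trace_id": "t1", "agent": "writer", "event": "tool_call",
     "tool": "format", "tool_input": {"style": "pdf"}},
]

alerts = repeated_calls(events)
for alert in alerts:
    print(alert)
```

A check like this could run on a schedule or feed a dashboard; the right threshold depends on how often legitimate retries occur in a given workflow.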

Future of agent log observability

As the use of autonomous agents grows, observability will become part of every AI system. Future platforms will offer:

- real-time trace visualization
- memory timelines for intelligent agents
- policy alignment checks
- continuous evaluation with model performance tracking
- self-healing workflows

This will support the future of AI and more reliable agentic AI use cases across industries.

Conclusion

Agent logs help teams understand how AI agents think, act, and collaborate. They make it easier to locate coordination failures, improve decision paths, and enhance responsible AI practices. As organizations adopt agentic AI frameworks, logs will become a core element of monitoring, evaluation, and reliable automation.

By using agent logs, companies support better Artificial Intelligence in business, safer implementations, and more confident adoption of agentic AI across operations.